Forget chatbots: CLI agents are AGI?

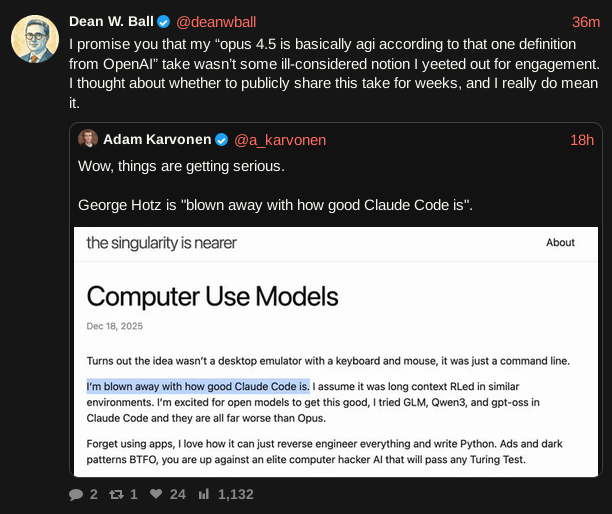

"it is why I also believe that opus 4.5 in claude code is basically AGI."

note: it’s $20/month to start with at Claude.ai

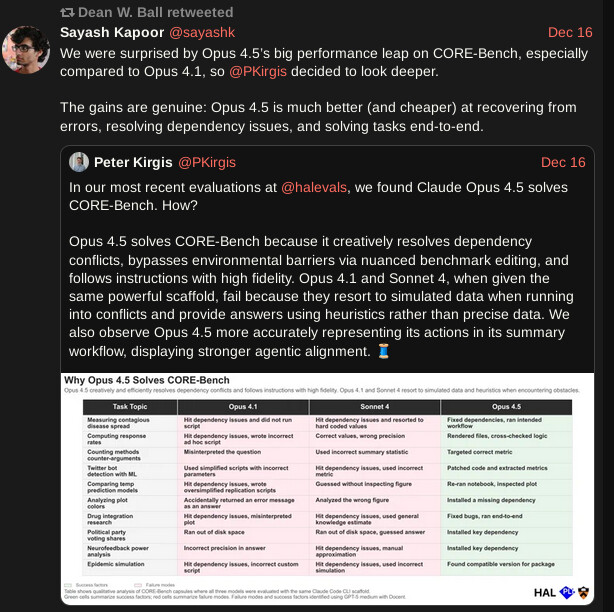

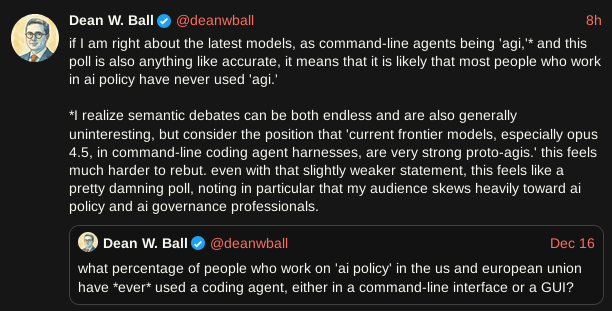

Dean Ball, who contributed the most to the AI Action Plan:

Probably not many people know this, but Anthropic (the company behind Opus 4.5) is the third-largest private company in the world, valued at $350B.

An interesting, if surprising, topic to bring up on this forum?

The latest command-line agents are impressive with respect to:

However, there are a few generally accepted requirements for Artificial General Intelligence (AGI). It usually implies a system that can do all of these things:

The latest CLI agents feel AGI-like because they blur the line between model and worker, externalise memory and tools, and operate in open-ended environments (OS, cloud, repositories). But this is scaffolding, not AGI.

What we have now reached is prototype AGI infrastructure, powered by narrow intelligence.

Before we progress to real AGI, I hope we will have prioritised alignment, built in control, and ensured that it is not controlled by one company, one government, or one ideology. Rather, AGI requires governance that is distributed, auditable, and pluralistic. In short: do no harm!

We are close to AGI, but we are not there yet. If there’s a failure mode ahead, it won’t be because we didn’t see it coming; it’ll be because we optimised for speed, profit, or prestige instead of restraint. And history suggests that’s a real risk.

My guess is this comes up here because some hope that true AGI would help us solve our longevity issues in a way that LLMs (or, say, NGI) haven’t yet. Any tool in a storm, right?

I use Claude every day at work to help me write code. It is very helpful, and very far from being AGI.

In general there is an extreme difference between the hype and supposed benchmark performance you see influencers post about, and the reality of using it. There’s a lot of money on the line that prevents complete honesty from some players.

AI isn’t coming to save us via LEV any time in the foreseeable future.

This series of posts on Twitter is probably very useful to AI investors. Numbers must go up, and all that.

This is Dean Ball. He’s not an influencer in the traditional sense; he was the main contributor to the AI Action Plan: https://www.whitehouse.gov/wp-content/uploads/2025/07/Americas-AI-Action-Plan.pdf

Anthropic also mentioned that they believed Opus 4.5 might be able to do AI researcher tasks given better scaffolding.

Were you using Claude Code?

If that question was for me, no! I’m a mathematician currently working with AI to progress disease treatments.

It was for matthost, but now I’m curious: how are you using AI, for math or more generally, to progress disease treatments?

That could tie in with Dean’s point that “… almost all human endeavor can be aided, in some way or another, by software engineering [and math]”.

Yes, I agree with Dean and you: AI is game-changing. However, I’m cautious about unregulated AGI.

My academic focus, distilled: leveraging PyTorch, CUDA, antibody-specific protein modeling, generative sequence design, active learning, and hybrid internal–external computation to dramatically accelerate monoclonal antibody (mAb) discovery, optimisation, and development de-risking, and to reduce their cost.

Maybe, after some testing, we’ll add Opus 4.5 to our technology stack.

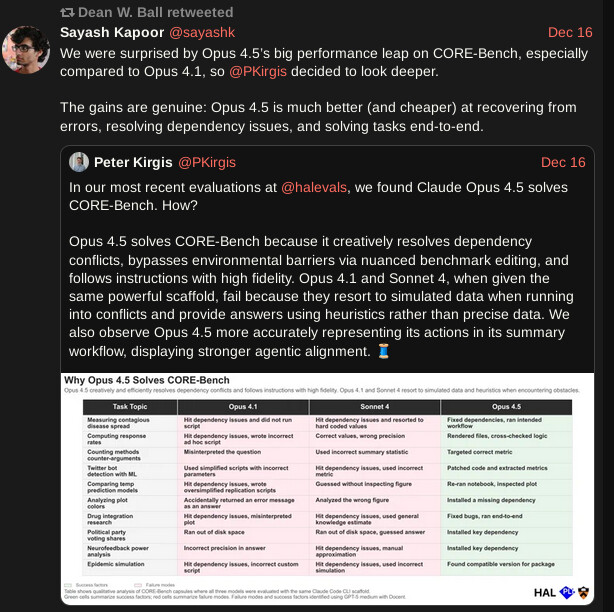

Recently published: the highest METR score yet at the 50% success rate (not at 80%), on a 5-hour software engineering task, where task length is measured as the time it takes a human.

If we check on the log scale, is it still super-exponential, as before?
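To make the 50% vs 80% distinction concrete: as I understand METR’s time-horizon metric, they fit a logistic curve of a model’s success probability against the log of human task length, and the 50% (or 80%) horizon is the task length where that curve crosses 50% (or 80%). The sketch below assumes exactly that model form; the slope `BETA` is a made-up value and `ALPHA` is chosen only so the 50% horizon equals the 5 hours mentioned above. It is not METR’s code or their fitted numbers, just an illustration of why the 80% horizon is always shorter than the 50% one when success falls with task length.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability p in (0, 1)."""
    return math.log(p / (1.0 - p))


def p_success(alpha: float, beta: float, t_minutes: float) -> float:
    """Assumed logistic model: P(success) = sigmoid(alpha - beta * ln t)."""
    return 1.0 / (1.0 + math.exp(-(alpha - beta * math.log(t_minutes))))


def time_horizon(alpha: float, beta: float, q: float) -> float:
    """Task length (minutes) at which the assumed model predicts success rate q.

    Solving alpha - beta * ln t = logit(q) for t gives
    t = exp((alpha - logit(q)) / beta).
    """
    return math.exp((alpha - logit(q)) / beta)


# Hypothetical parameters: BETA is arbitrary; ALPHA is set so that the
# 50% horizon is 300 minutes (5 hours), matching the figure in the post.
BETA = 1.0
ALPHA = BETA * math.log(300.0)

h50 = time_horizon(ALPHA, BETA, 0.5)
h80 = time_horizon(ALPHA, BETA, 0.8)
print(f"50% horizon: {h50:.1f} min")  # prints "50% horizon: 300.0 min"
print(f"80% horizon: {h80:.1f} min")  # prints "80% horizon: 75.0 min"
```

With this made-up slope, the 80% horizon comes out at a quarter of the 50% horizon, because logit(0.8) = ln 4. A flatter real-world curve (smaller `BETA`) would widen that gap further.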


It’s happening, for sure. It’s really good.

Anthropic said this as well: they said it’s possible for the current models given better scaffolding. Or is it 3 months away with a newer model?

I’m presuming this means massive amounts of computer science experiments and iterated training runs. It’s like a Factorio lab.

Edit: I misread it; he’s already working at Anthropic, lol.

I’m a software engineer and can say Opus 4.5 is in a league of its own.

It’s been somewhat breathtaking how quickly software development has changed in 2025.

I rarely write my own code anymore.

https://x.com/MarcJSchmidt/status/2006809732582093095#m

I started using Claude Code when Opus 4.5 came out. I was blown away and immediately used it non-stop for hardcore coding, 14h/day. I was addicted, always hitting limits, so I bought two $200/month accounts. Today I cancelled both accounts and switched to Codex 5.2. Why?

I wanted to use it for rather complex stuff, like database drivers, compilers, cutting-edge machine learning models, and more, but it consistently fell apart after a few thousand lines of code. Claude Code’s CLI is also very slow, a CPU hog, and froze randomly every day.

etc. etc. etc.

I’ve now switched to Codex 5.2, which is able to understand the complex code base, and I can keep improving it without babysitting it and steering it constantly. I’m actually surprised how much better it is. Codex also doesn’t have those cheap, dopamine-hit-triggering messages/lies.

Interesting how fast things change: in one month Anthropic got $400 from me, the next month $0, and OpenAI got $200. Maybe in a few weeks another service provider pops up and gets my money. It’s way too early to tell who the long-term winner is here.

What remains in memory is that Claude Code is slow, consumes way too much CPU, and freezes often.

It also lies, goes for the quick win, and even sabotages my code base to get the win. These had a real impact on me and generated insane costs on my side: cleaning this up is not fun.

I’m also seeing rumors and signals on the web that OpenAI might accelerate its development timelines this year, perhaps by a lot in a very short time (specifically in math). If the rumblings and signals are to be believed, that would probably be much faster than Kokotajlo et al. and Terry Tao seem to imagine.