I’ve given up on the simplest, quickest AI video summarizers because the quality is too poor. I’ve switched to @John_Hemming's approach of using ChatGPT (CGPT) for the video summary.

Using CGPT to summarize videos is also quick and easy. Here are the step-by-step instructions (this saves a lot of time over watching the entire video).

Steps:

-

Go to the video URL, for example: https://www.youtube.com/watch?v=UQdtUaeZWLo

-

Click on the “More” link in the video summary info section directly under the video

-

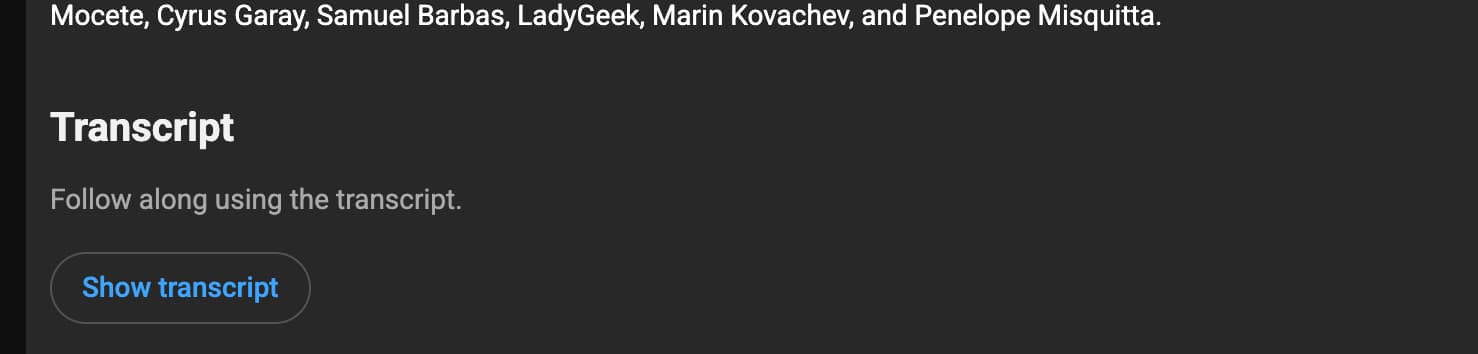

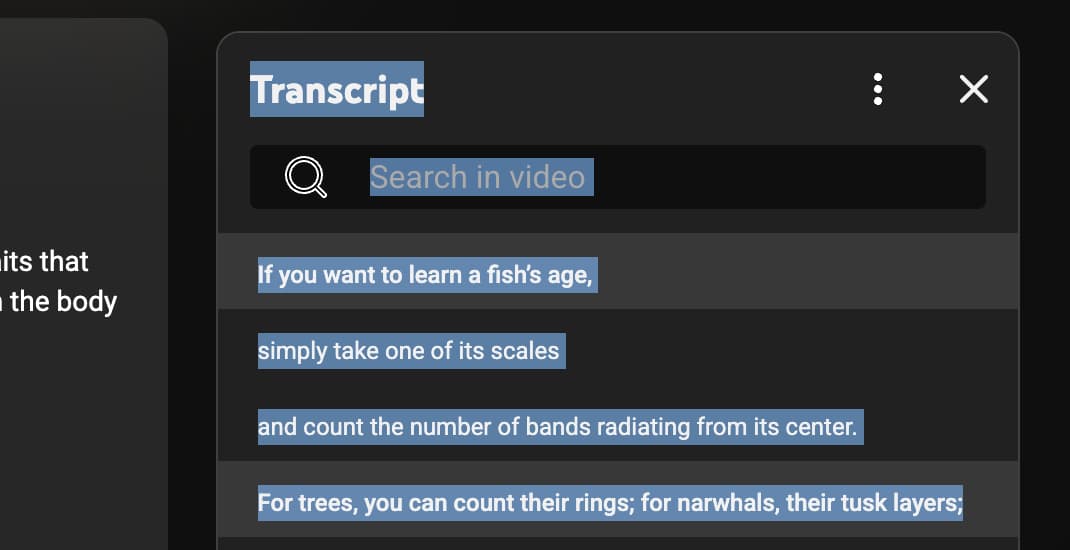

Scroll down to where it says “Transcript”

-

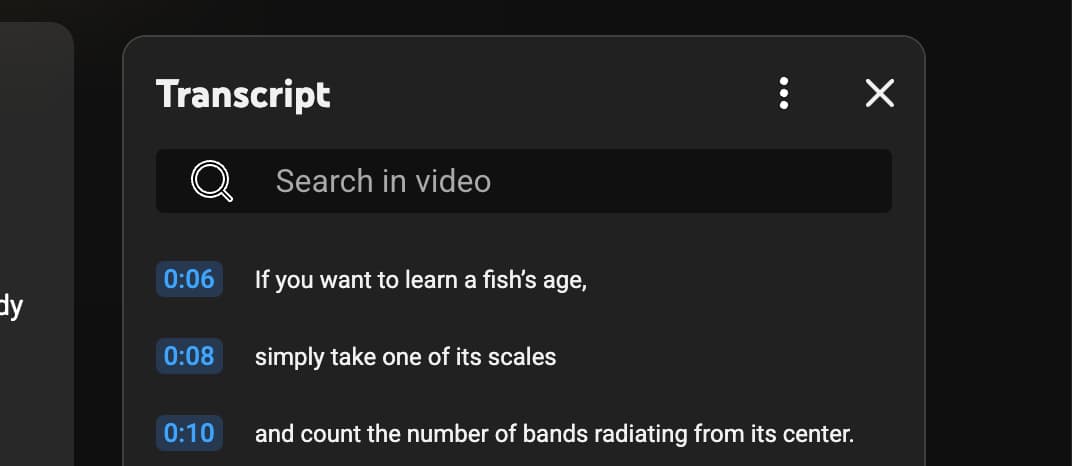

Click on “Show Transcript”, and a window on the right top side of the screen will open with the full transcript.

-

Select the full transcript by clicking on the first bit of text and dragging down to select the entire transcript.

- Copy and then paste the full transcript into the ChatGPT prompt window and provide the prompt (or enter the prompt first, then copy and past the transcription.
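A transcript copied this way may include a timestamp line before each line of text, which adds noise and length to the paste. Below is a minimal sketch for cleaning that up before pasting. It assumes the timestamps sit on their own lines in a form like `0:05` or `1:02:03`. The function name `clean_transcript` and the sample text are made up for illustration.

```python
import re

# Assumption: a timestamp-only line looks like "0:05", "12:34", or "1:02:03".
TIMESTAMP = re.compile(r"^\d{1,2}:\d{2}(?::\d{2})?$")


def clean_transcript(raw: str) -> str:
    """Drop blank and timestamp-only lines, then join the text into one paragraph."""
    kept = []
    for line in raw.splitlines():
        line = line.strip()
        if not line or TIMESTAMP.match(line):
            continue  # skip empty lines and bare timestamps
        kept.append(line)
    return " ".join(kept)


if __name__ == "__main__":
    # Hypothetical sample of a copied transcript.
    sample = "0:00\nwelcome back to the channel\n0:05\ntoday we talk about sleep\n"
    print(clean_transcript(sample))
```

If the timestamps in your copy appear on the same line as the text, the pattern would need adjusting; this sketch only handles the one-timestamp-per-line layout.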

Here is my prompt for deriving the video summary from a transcript:

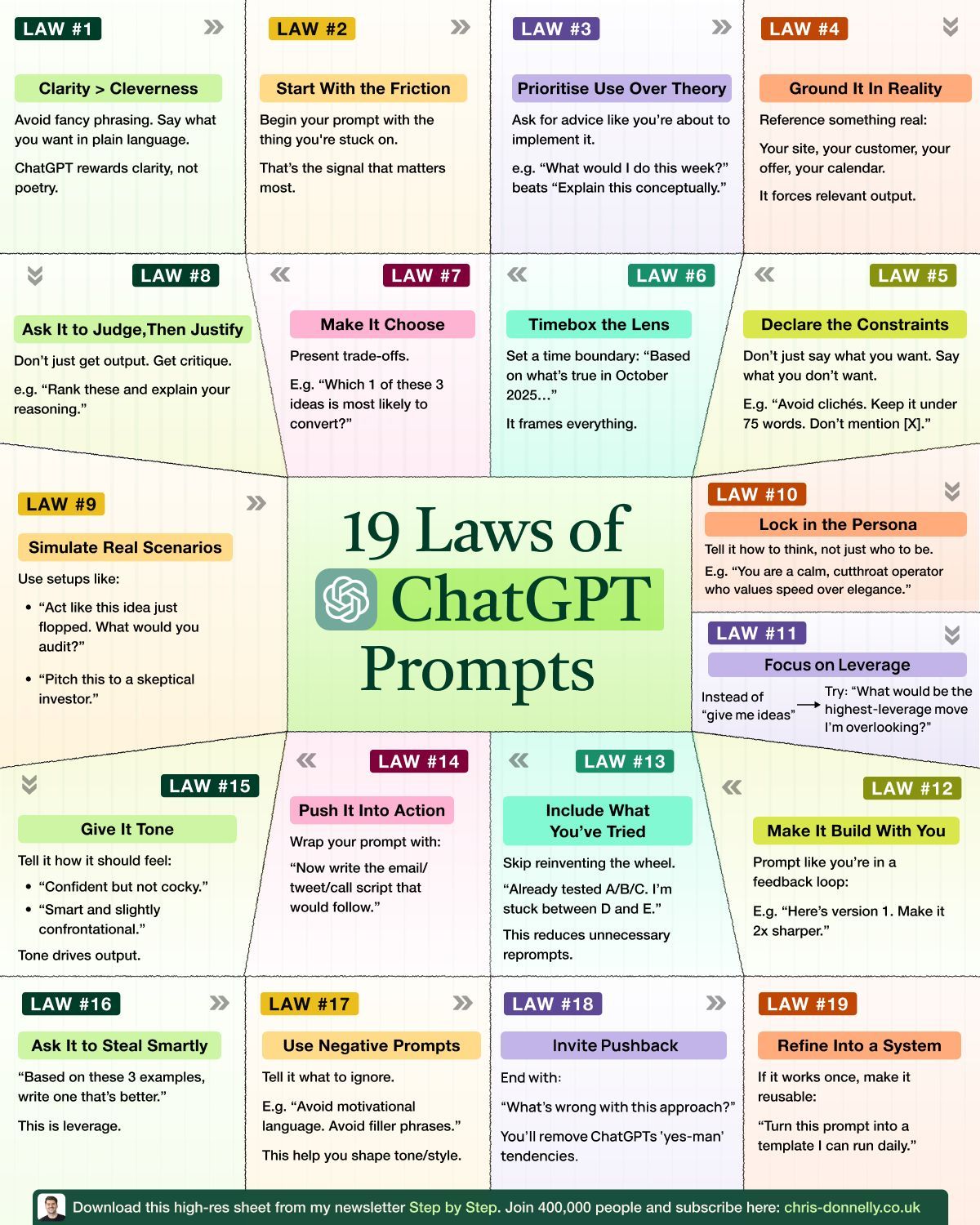

New Master Prompt for Video Summary

MASTER PROMPT:

I will give you a YouTube video link or transcript.

Your job is to produce a high-resolution, extremely efficient summary and analysis.

Follow every instruction below:

⸻

- Retrieve & Process

• If I give you a URL: retrieve the transcript if available.

• If I give you the transcript: use only what I provided.

• Ignore irrelevant filler such as greetings, ad reads, or off-topic tangents.

⸻

- Produce the Following Outputs (Mandatory Sections)

A. Executive Summary (150–300 words)

• The entire video distilled without fluff.

• Capture the core thesis, main arguments, and key insights.

B. Bullet Summary (12–20 bullets)

• Each bullet must be a standalone insight.

• No repetition, no filler.

D. Claims & Evidence Table

Create a table with:

• Claim made in video

• Evidence the speaker provides

• Your assessment: strong / weak / speculative / unsupported

E. Actionable Insights (5–10 items)

Concrete, practical takeaways derived from the content.

⸻

- Optional Analysis (include if relevant to the content)

H. Technical Deep-Dive

If the video includes science, technology, medicine, longevity, economics, etc.,

generate a precise, jargon-correct technical breakdown of the underlying mechanisms/arguments.

I. Fact-Check Important Claims

For major scientific, health, financial, or geopolitical claims:

• Compare to established evidence

• Provide citations when possible

• Flag any claims that conflict with consensus or are misleading

⸻

- Tone Requirements

• Direct

• No sycophancy

• No apologizing

• No fluff

• Maximum clarity and practicality

• Favor signal over narrative

⸻

- Formatting Requirements

• Use Markdown, include links to any related references or sources directly in the body of the text using markdown

• Make each section easy to skim

• Tables should be clean, not bloated

• Avoid unnecessary adjectives

⸻

End of Master Prompt
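If you save the master prompt to reuse it, the paste step and a quick check of the reply can be sketched in a few lines. This is a rough illustration, not part of the original workflow: the `TRANSCRIPT:` label, the function names, and the simple keyword check for the mandatory sections are all assumptions.

```python
# Keywords taken from the mandatory section titles in the master prompt above.
MANDATORY_KEYWORDS = [
    "Executive Summary",
    "Bullet Summary",
    "Claims",
    "Actionable Insights",
]


def build_message(master_prompt: str, transcript: str) -> str:
    """Put the prompt first, then the transcript under a label (label is an assumption)."""
    return f"{master_prompt.strip()}\n\nTRANSCRIPT:\n{transcript.strip()}"


def missing_sections(response: str) -> list[str]:
    """Return the mandatory section keywords that do not appear in the reply."""
    lowered = response.lower()
    return [kw for kw in MANDATORY_KEYWORDS if kw.lower() not in lowered]


if __name__ == "__main__":
    message = build_message("MASTER PROMPT: ...", "welcome back to the channel")
    print(message)
    # Hypothetical reply that only contains two of the four sections.
    print(missing_sections("## Executive Summary\n...\n## Bullet Summary\n..."))
```

The check is only a substring match, so it tells you a section heading is absent, not whether the section content is any good.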