Jan 28, 2023 at 7:00 AM

This should help immensely. AI is already helping radiologists sort through X-rays to detect cancer more quickly.

I've already mentioned radiology applications many times. This isn't really all that new, and if you're particularly impressed, you're probably not familiar with the space. The FDA approved an algorithm for detecting Colles fractures back in 2018.

Note the difference between reasonable advances in computer vision applications and the perception of some "all-encompassing AI" that will do everything a doctor does. That perception comes with wild claims of "endless applications," often expected very soon by people who don't understand the space and have zero context.

To be fair, lay articles like those in Wired magazine are always going to be very "high level" and somewhat disconnected from industry publications and expertise.

I suspect the technology will move a lot faster than the regulatory and legal environment (who gets sued when the AI makes a mistake?), as well as public acceptance of AI (there could be a backlash at some point).

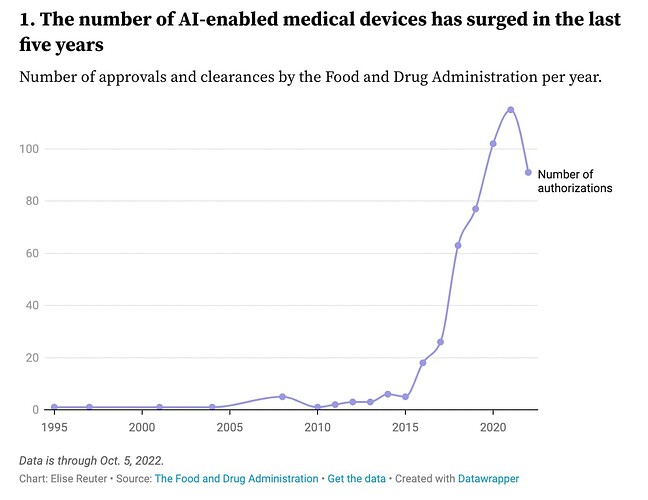

But the FDA, at least, says it is trying to move faster in this area. The trend is clear (though Covid did slow things down).

Interestingly, radiology seems to dominate the AI applications so far.

Yeah, it's not surprising that radiology dominates. Changes in Medicare technology payments covering radiology "AI" revived VC funding, and computer vision image analysis has very well-defined inputs that aren't that messy to train on, so one can get sufficient data for one very specific indication even with siloed data. It's not some "general AI" that carries over to other domains.

One way they get around it is that a radiologist still reviews the slides but the "AI" companies get the payments, particularly in emergency settings. It's not going to replace a noninterventional radiologist in the near future, but that is actually a plausible future in, say, roughly one to two decades.

As for medical devices, I wouldn't hold my breath just yet, even for some parts of cardiology, which I believe is the second most common application, although dwarfed by radiology. I'm not a cardiologist, and I'm not technically supposed to give official EKG interpretations for patients, but I've read through several textbooks, including Dubin's, and trained on a lot of actual EKGs with cardiologist feedback, so I'm pretty certain I have some skills. I only do it because I'm naturally skeptical that even the experts read slides or EKGs correctly 100% of the time when they're rushing to pump volume after missing a night's sleep, and I don't want patients to miss something that could use a second set of eyes. A cardiology fellow told me I was excellent and on par with a fellow, if that's worth anything. I saw many times that the ML algorithm was unreliable at interpretation, since it still needs clinical context. Way too many clear-cut false positives. It will take more than a decade to get there even for a "simple" EKG, and even that's a maybe.

As for consumer devices, the Apple Watch touts its EKG function, but it's only a single lead and not an FDA-approved device for afib. Plenty of folks already seem to treat it as an afib device, despite high false-positive rates or simply not being useful at all. One patient even mistakenly thought it was already FDA-approved. I have an Apple Watch myself, but I'm not particularly keen on 1-lead EKG use. I'm fine with folks using it as a rough heart rate trend line, even though it's an inaccurate heart rate monitor, because I personally don't like wearing the much more accurate chest monitors all the time. But it's not what people often assume.

Patients get concerned after "overtesting" with garbage data from devices that aren't even FDA-approved or backed by particularly compelling published data. In this case, they simply end up paying more, but if more invasive tests follow because someone wants to practice defensive medicine, harm can be done. If you want to pursue this "quantified self" deal, at least get high-quality data.