https://x.com/AviBittMD/status/1928200053312868795#m

Oh yeah, it was definitely having problems before, and it’s just getting worse and worse now.

IMO, we need to switch the entire model towards pre-registered experiments. Imagine this:

It would speed things up. It would cut down the time wasted submitting, revising, and resubmitting to different journals. It would stop reviewers from moving goalposts. It also removes the incentive to p-hack, to fiddle with or fabricate data, to change the analyses to get the findings you want, etc. Scientists would get recognition, kudos, etc. for their good work, without having to hope and pray that the study turns out positive and significant.

The difficulty, of course, is that publication is limited to certain results (journals don't much want to publish negative results, even when they should). I think the role of the journal probably needs to change, with peer groups acting as associations that let a publication build credibility.

I don't think a centralised shift is practical. In the end, pre-prints may dominate to the point that many things are never actually "published" beyond the pre-print.

I may be naive, but I think the current system only exists because journals are driven by citations. They think positive/successful/significant studies get citations, so they only accept those papers. It's crazy that you can have a well-performed experiment that finds no significant difference and is essentially unpublishable. That system only incentivises scientists to fudge numbers until something works, which is exactly what happens.

Under my model, journals would be publishing pre-vetted, good science, and negative results can also be massively important. Their reputation might even be boosted, because there wouldn't be so much BS: manipulated studies, dodgy analyses, and publication bias.

At the university level, pre-prints are absolutely worthless. For my promotion and future career, my university only cares about papers published in Scopus-indexed journals, preferably in the top whatever %. Even with the "DORA" stuff, it still matters. The same goes for many funding agencies. So currently, I could do amazing science and put out pre-prints, and in 2-3 years have no grant money to do anything.

I accept all of that. Any change probably needs to start with the funding process.

The graph of life expectancy against per-capita healthcare spending doesn't reflect the healthcare system; if they think it does, they need a robust analysis showing that. The gap is driven by drug overdoses, obesity, and firearms. Where outcomes are actually modulated by the healthcare system, the U.S. is doing better (e.g., cancer screening).

An increase in disease can be due to changing criteria, more screening, less stigma, etc., which are artifacts and not real increases. The only organic increase in chronic disease is obesity and obesity-related disease. The artifacts are things like the increases in autism, ADHD, and celiac disease.

The solutions the report presents for either artifactual or organic increases in disease are absent, quite poor, or take solutions that would actually work off the table.

We have a solution for the real problem, which is obesity (food has become like crack), and RFK Jr. has not only taken it off the table, he has actively discouraged it.

The claim that 40% have a chronic disease is an error: the source they cited says 37.5%, and it states this explicitly.

The increases in disease among children are not adjusted for obesity.

Diabetes prevalence rises along with obesity over time, but asthma doesn't.

It's true that childhood obesity is increasing and is a problem, and diabetes is also increasing, which is expected, given that obesity is.

They are presenting a "crisis of autism", and you might have some idea why they're inclined to present it as an "organic crisis"… cough "vaccine cause autism"

Diagnoses are increasing, but Avi disagrees that this is an organic increase.

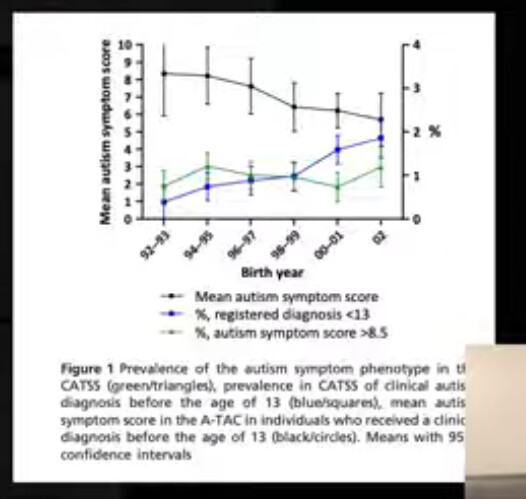

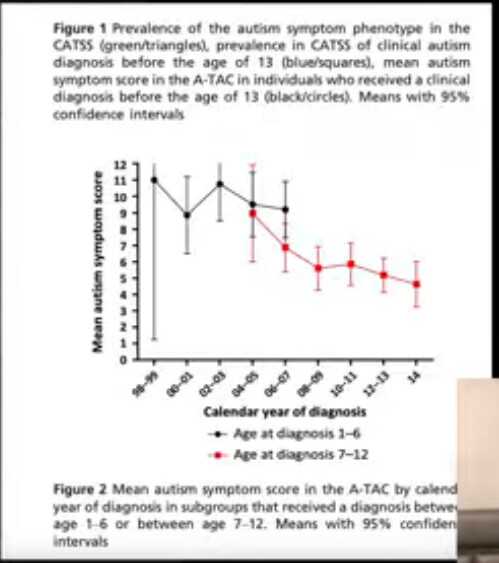

The graph shows that successive diagnostic changes coincide with the increases in prevalence over time. It was very hard to receive an autism diagnosis in the past (behaviors associated with both low and high IQ were required), and it was often diagnosed as, e.g., schizophrenia instead. The criteria were inappropriately strict. The recent DSM-5 has reduced new diagnoses.

If the underlying trait a diagnosis is based on stays the same over time but diagnosed prevalence increases, the increase is an artifact (e.g., BMI staying the same while obesity diagnoses increase). If the underlying symptoms of autism increase along with the prevalence of diagnosis, the rates are increasing organically and it is not an artifact.

Data on autism symptoms over time doesn't exist for the U.S., but it does for other countries, and there the symptoms are flat over time from 1993-. That's very strong evidence the increase is an artifact.

Autism symptoms may have even decreased over time.

This doesn't mean autism is overdiagnosed, and the rise in diagnoses can be a good thing, but it doesn't need any solution.

ADHD shows a similar pattern: there's a counter-hypothesis, plausible mechanisms, and evidence for it. The burden is on them to show that it's not an artifact.

For cancer… we have become better at screening, detection, imaging, blood tests, diagnosis, and technology overall. Childhood cancer all-cause mortality and deaths have decreased over time.

It’s WILD that they are this incompetent and pushing this to the general public and policy.

The increases in depression and anxiety are not artifacts, and Avi agrees, but RFK doesn't offer any good solutions and may even frame viable solutions as problems (although not as badly as with obesity).

Some allergy increases are organic rather than artifactual (celiac is not one of them), and the increase in atopic dermatitis (AD) is real, because severe AD is becoming more common.

Diagnosing more celiac disease is a success of the healthcare system; there's nothing here, we're just doing a good job. This is a monstrosity of a report. Catching more cancer at stage 1 is a good thing. You can frame it as more people getting cancer, but that is dangerously misleading.

So what's causing the increase in childhood chronic disease? (Most of the increases aren't real at all, and are evidence of things going well, i.e., of us improving.) Obesity we already knew we should talk about, but RFK's solutions are trash.

It's not the "CHEMICALS!" and the "MEDICATIONS!"

Interesting that saturated fat is presented with a negative connotation in the report, for all the keto people out there who worship RFK.

Yes, ultra-processed foods (UPFs) are designed to increase caloric intake; food companies do that to sell product. But "UPF is bad" works as a heuristic, not as a rule: protein powder is classified as UPF, yet it's a healthy food. A better heuristic is hyperpalatable food rather than UPF. That's typically a combination of salt, sugar, and fat, added to make a food hyperpalatable and increase caloric intake. It overrides satiety mechanisms for whatever reason.

You can make something hyperpalatable without it being UPF: take a steak, put salt and sugar on it, add a little fat, and you've made it hyperpalatable. It will induce overeating, but it isn't UPF.

The chemicals argument (including microplastics) is unpersuasive and very weak.

The pervasive technology use argument is reasonable, but the argumentation is sloppy: it moves from children to young adults without a good, specific inference.

The MAHA report claims that the effects of long-term ADHD medication use converge with those of behavioral modification without medication, but participants got to do whatever they wanted later in the study, and medication was also used in the behavioral modification group.

The cited study's own conclusion: "It would be incorrect to conclude from these results that treatment makes no difference or is not worth pursuing." The authors say treatment would only work long term if patients actually kept taking the medication.

This is a government report, and it has been completely botched. The study is represented as saying the exact opposite of what it says. The conclusion is that the medication works and that if you stop taking it, it doesn't work (no shit); what's presented is "that it doesn't work long term." Complete misrepresentation of the study.

I don't know whether they didn't read it or had an AI read it, but this is really bad. This is gross incompetence.

Antidepressant use vs. psychotherapy is a false dichotomy: they aren't mutually exclusive, and rising depression rates don't take antidepressants off the table. Some of these aren't in-depth empirical debunks, just basic philosophy.

Off-label use is not inappropriate use, including for antipsychotics in children. A drug may lack approval for a condition simply because there wasn't enough money to get it FDA-approved for that indication (e.g., because it went generic). FDA approval requires RCTs, not, e.g., cohort studies showing benefits. We prescribe off-label medications all the time, for good reason.

They got the number wrong for "unnecessary" antibiotic use. Was it an LLM that, at the time, couldn't calculate the correct number? (They consistently make errors in favor of their position.) It's less than 30%, not 35%.

Yes, there's an association between antibiotic use and various conditions, but getting infected is also associated with various conditions, and this needs to be addressed. For example, suppose people who had polio were treated with antibiotics, and then RFK comes along and says, "Wow, if you treat these people with antibiotics, they get paralyzed." That's the error of not adjusting for the indication for antibiotics, which may itself cause the chronic health outcome (confounding by indication).

If someone appears to have sepsis, we treat them with antibiotics, and sometimes it turns out to be a false positive. That's expected, because not treating is damaging. You have to make the case that less than 30% unnecessary antibiotic use is bad. We don't have the perfect knowledge to get it to 0%. Weigh that against the risk of erroneously not treating an infection.

The report is full of this: "increased by X", a big number, but nothing on where the numbers come from or whether the change is bad or good.

Conflict of interest ("corporate capture") is not a flat-out reason to dismiss evidence; in fact, in many cases it's the only way you're going to get evidence. Motivated study design is good, not inappropriate, because it allows for an adequately powered design that finds effects a non-biased researcher might miss (their money is on the line). Lies, damn lies, and statistics and such are not good, however, which is why you look at the methodology and the results. When industry-funded research is more favorable to industry, it can be for good reasons, like making sure to study something with adequate power to find effects that a grant-funded researcher might've overlooked. It's not necessarily malicious.

RFK has not articulated whether results aligning with their funding source are malignant or benign. The same applies to a food RFK likes to defend: red meat. Either industry funds the research, you find another solution, or the research doesn't get done. Will you socialize the research? Have taxpayers pay for it? Should all pharma research be funded by taxpayers? That would take at least $100B/yr in taxes.

The next part of the debunk will start at the report's "A Closer Look at Ultra-Processed foods" section.

IMO, I’d be ok with that. We seem to be able to find infinite money for other, less important (IMO), things. If we could answer massive health questions in a truly independent, unbiased, way - hell yes.

As for autism, the recent guest on Peter Attia’s podcast said that the rise is real/organic, and it’s not just an artefact of changing the diagnostic criteria. And she actually did mention potential reasons such as chemicals, plastics, and also more “boring” reasons such as increasing parental age.

She doesn't present any data? So why would you believe it? (The dotted lines on the graph mark DSM and other criteria changes.) The video quality is better:

Stable symptoms over time, from data outside the U.S. (as such data is not available for the U.S.):

Severity has decreased over time, but diagnosis has gone up:

The evidence presented in the debunk suggests no change, or even a decrease, in autism over time.

There's literally no reason for that. As mentioned, researcher bias can lead to more effective study designs that actually find something, compared with a grant-funded researcher who doesn't care as much. A corporation with a lot of money on the line will (1) select the best outcome and (2) adequately power for it, based on the pre-clinical studies it has done. Equally, if anyone lied, you can look at the methodology and the results, so there's no problem.

Again, the fact that biased research more often reports positive results in favor of that bias can simply be due to better study design.

But sure, if people are up for tripling the NIH budget to do all research in-house, fine, even if it might also be a less effective use of money.

A little history and my opinion:

While the word “autism” has been around longer than I have been alive, I don’t think the average medical GP was aware of it, and indeed probably didn’t have the education needed to diagnose it.

When I was young, unlike today, you had to have had something very serious indeed before your GP sent you to a specialist.

Today, my PCP/GP seems only to be there to order some bloodwork and then send me to a "specialist."

So, yes, many diseases, especially mental ones, were seriously underreported.

Even if a GP did diagnose something like autism, how likely was it to be reported?

There was no internet or intranet, no computers outside of government and academia.

So, at the end of each day or week, do you think all GPs were filling out paperwork and sending it to the CDC listing every patient’s diagnosis?

Even today, do you think every diagnosis from flu to xenophobia gets reported?

We don’t have an accurate number for many diseases because they are still underreported.

New and final report (it seems):

Hmmm… So the government's plan is to tell people to exercise, lose weight, eat healthy, and take fewer meds. Well, 3 out of 4 isn't bad, but don't all Americans know this already? How do you raise Americans' awareness of things that should be as well known as eat daily, drink water, and breathe?

What a waste of time…

It's like a financial planner who recommends becoming richer, because in his experience being rich puts you in a better financial situation than being poor. Very true, and that's why you pay the advisor the big bucks (that way he's taking his own advice, thank you).

Reminds me of an old joke. A retired person with a tiny pension comes to the administrator in charge of pension adjustments, and says: “Sir, I can barely walk, I have not eaten for a whole week”, to which the official responds “well, you’re just gonna have to force yourself to eat”.

RFK Jr. is really earning his pay. It's that famous cost cutting and making government more efficient: free, sensible advice that costs the government less money, and everyone is happy.

GLP-1 agonists solve this.

GLP-1 RAs are a short-term solution to a problem that is entirely manufactured and shouldn't exist in the first place. In pursuit of profits for some companies, and some convenience, we've created a toxic environment in which to live. Now we're looking to other companies to sell us a solution to the problem. It's pretty ridiculous really, and the loser is the average person.

(Note, when I say “environment” I mean the food environment, dependence on vehicles, poor health education, rife misinformation, lack of trust in authorities and everything else.) For example, why are school children still routinely eating chicken nuggets, fries and fizzy drinks in school?

If this government is actually as brave and renegade as it would like us to believe, it could start by legislating a lot of common-sense things: a sugar tax, coherent food labelling, a ban on junk food advertising, better health education in schools, fixing the garbage that is fed to kids in schools every day, etc. That is low-hanging fruit and could vastly improve long-term health outcomes. That said, it's the same government that just ended USAID, which was on the verge of actually eradicating AIDS, so whether it actually cares about health outcomes is questionable.

Yea I hate it. Immense willpower is needed to even care enough to educate yourself on how to walk through this minefield.

I guess education + GLP-1 RAs is the answer.

I’m yet to see politicians that aren’t almost all talk. We need to save ourselves.

Those are evil drugs invented by evil pharma lizards.

There's an interesting question here: if someone has ever been a candidate for elected office or an elected official, do they become a politician, and do they remain one after leaving office (or after they stop standing for election)?