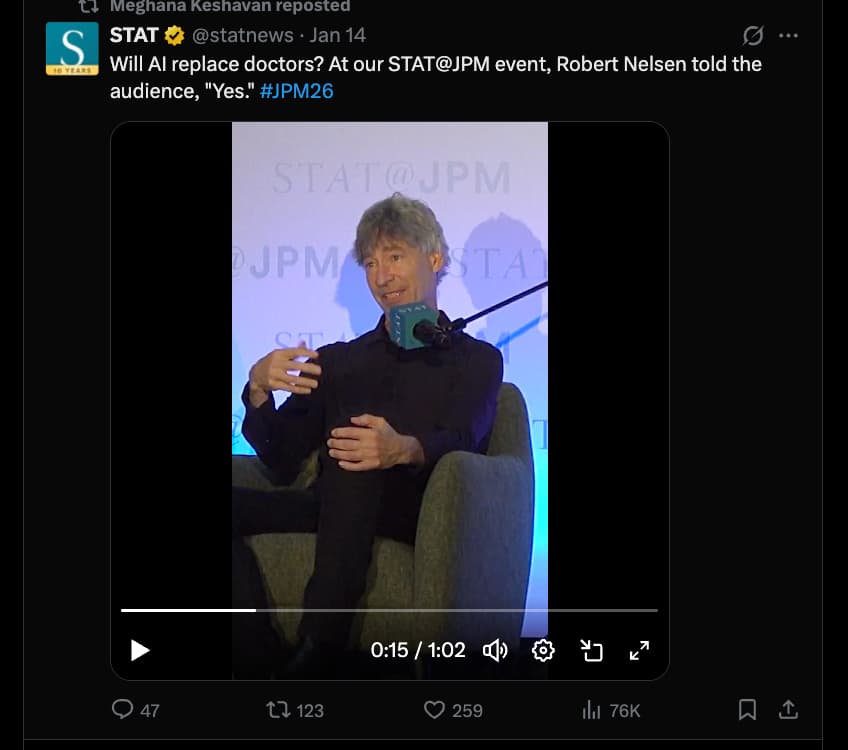

Will AI replace doctors? At our STAT@JPM event, Robert Nelsen told the audience, "Yes."

I encourage you to listen to his discussion here: https://x.com/statnews/status/2011573799620067564

Then, Robert responded on X to that post and his video:

Sorry folks. Most (not all) doctors (and most later stage venture capitalists) will be replaced by AI, and robots. It is all about getting the AI the right information, which will be new longitudinal data streams, behavior patterns, proteomics, regular sequencing, imaging, etc. Most of that can be done by less skilled workers, and eventually robots. I come from a family of docs. Love them dearly. Most docs are trained that they are right, not to acknowledge mistakes because of liability, and it is super clear AI is already better than most docs, including academic docs, when provided accurate data. So in months and years it will be a cake walk. 5 years ago, I said my nanny and an AI will be better than most docs, and I stand by that. Maybe in 5-15 years, we need 1/50th the amount of docs, and more low skilled data gatherers, and robots. We will need nurses longer, but robots will enable nurses to be much more productive. For the developing world, it will all be about access and getting data into a phone, as the best doctor in the will be AI. It is hardest for doctors to imagine these changes because they have been taught that they are right, and avoid negative data as a class, and their social status, even their title (try calling them by their name) is so deeply tied together. So they become refractory (see comments, lol). In the interim, the smarter docs will embrace the change, and it will make them better.

Source: https://x.com/rtnarch/status/2012242455286984957?s=20

More on Robert Nelsen:

For This Venture Capitalist, Research on Aging Is Personal; ‘Bob Has a Big Fear of Death’

Robert Nelsen has invested hundreds of millions in Altos Labs, a biotech company working on ways to rejuvenate cells and eliminate disease

The investment firm Robert Nelsen co-founded in 1986, Arch Venture Partners, has racked up billions in profits from early stakes in companies developing methods to detect and treat cancer and other diseases.

In his personal life, Nelsen, 60 years old, downs a daily cocktail of almost a dozen different drugs, including rapamycin, metformin, taurine and nicotinamide mononucleotide, all of which he says help prevent illness and promote longevity. Nelsen has a full-body MRI every six months, sees a dermatologist every three months and has annual blood tests to detect cancer. At his home in the Rocky Mountains, he works out in an “electric suit” that he says emits low-frequency impulses to build muscle and improve health.

“I know I will get cancer, I just want to catch it early,” says Nelsen, who says an MRI several years ago has already identified thyroid cancer at an early stage. He has seen family members die of the disease.

“Bob has a big fear of death,” says his wife, Ellyn Hennecke.

Read the full story: For This Venture Capitalist, Research on Aging Is Personal; ‘Bob Has a Big Fear of Death’ (WSJ)